Alive and not alive

At the core of this project is the idea that new technologies are not alive in the same way as people, plants, and animals -- but nor are they inanimate like tables, rocks, and toasters. We attribute perception, intelligence, emotion, volition, even moral standing to social robots, computers, tutoring agents, tangible media, any media that takes on -- or seems to take on -- a life of its own.

Sometimes, we relate to technology not as a thing or an inanimate object, but as an other, a quasi-human. We talk to our technology rather than about the technology, moving from the impersonal third-person to the personal second-person, moving into social relation with the technology.

So, given that we perceive and interact with these technologies as if they are alive... are they? At what point do they become alive?

What does it mean for a technology to be alive?

How much does whether they are “actually” alive matter, and how much is our categorization of them dependent on how they appear to us?

Maybe they will not fit into our existing ontological categories at all.

Not things.

Not living.

Something in between.

Story

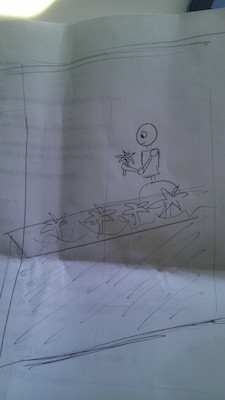

I explored the question of how to encounter the "aliveness" of new technologies through a set of life-size sequential art pieces.

The story followed several robots in the human world. Life-size frames filled entire windows. The robots ask about their own aliveness, self-aware and struggling with their own identity. They try to fit in, but don't. A wheeled robot looks sadly up at a staircase. A shorter wheeled robot sits in an elevator, unable to reach the elevator buttons. A stained-glass robot draws our attention to the personal connections we have with our technology.

Social robots. Virtual humans. Tutoring agents.

They are here. They are probably not taking over the world. They are game-changers and they make us think.

Perhaps they cannot replace people or make people obsolete. Perhaps they are fundamentally different. Perhaps they will be a positive force in our world, if done right. If viewed right. If understood as what they are. As something in between.

How will we deal with them? How will we interact? How will we understand them?

Medium

The story was created as a life-size story that the reader could walk through, so reading would feel more like walking down the hall having a conversation with the character than like reading.

I read Scott McCloud's great book, Understanding Comics, around the same time as doing this project. (Perhaps you can see the influence. Perhaps.) Comic-style, sequential art to promote a dialogue. An abstract character, because if you had an actual robot tell the story, something would be lost. Outlining the robot character in less detail, as more abstract, drew more attention to the ideas being conveyed, and let viewers project more of themselves onto the art.

The low-tech nature was partially inspired by ancient Chinese cut paper methods, as well as by some comics styles. The interaction between the flat, non-technological medium through which the story is told and the content of the story -- questions about technology -- calls attention to the contrast between living and thing. What is the role of technology in our lives?

Installation

Select frames from Am I Alive? were installed at the MIT Media Lab during The Other Festival.

Video

I made a short video showing the concept, the making of the pieces for the installation, and photos of the installation. Watch it here!

Relevant research

If you're curious about the topic of how robots are perceived, here are a few research papers you might find interesting:

- Coeckelbergh, M. (2011). Talking to robots: On the linguistic construction of personal human-robot relations. In Human-robot personal relationships (pp. 126-129). Springer.

- Kahn Jr, P. H., Kanda, T., Ishiguro, H., Freier, N. G., Severson, R. L., Gill, B. T., Ruckert, J. H., & Shen, S. (2012). “Robovie, you'll have to go into the closet now”: Children's social and moral relationships with a humanoid robot. Developmental Psychology, 48(2), 303.

- Severson, R. L., & Carlson, S. M. (2010). Behaving as or behaving as if? Children’s conceptions of personified robots and the emergence of a new ontological category. Neural Networks, 23(8), 1099-1103.