Relational AI: Creating long-term interpersonal interaction, rapport, and relationships with social robots

Children today are growing up with a wide range of Internet of Things devices, digital assistants, personal home robots for education, health, and security, and more. With so many AI-enabled socially interactive technologies entering everyday life, we need to deeply understand how these technologies affect us—such as how we respond to them, how we conceptualize them, what kinds of relationships we form with them, the long-term consequences of use, and how to mitigate ethical concerns (of which there are many).

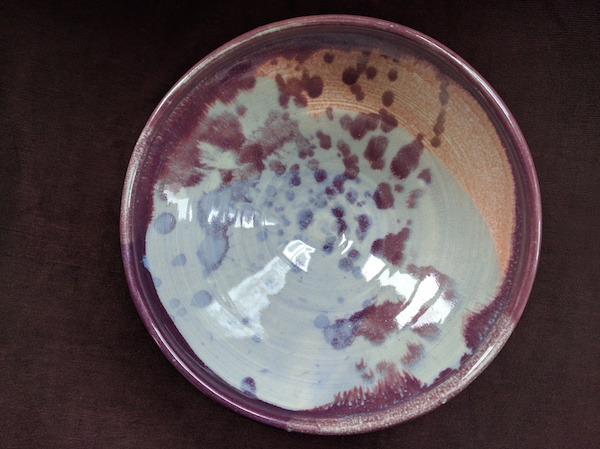

In my dissertation, I explored some of these questions through the lens of children's interactions and relationships with social robots that acted as language learning companions.

Many of the other projects I worked on at the MIT Media Lab explored how we could use social robots as a technology to support young children's early language development. When I turned to relational AI, instead of focusing simply on how to make social robots effective as educational tools, I delved into why they are effective, as well as the ethical, social, and societal implications of bringing social-relational technology into children's lives.

Here is a précis of my dissertation. (Or read the whole thing!)

Exploring children's relationships with peer-like social robots

In earlier projects in the Personal Robots Group, we had found evidence that children can learn language skills with social robots—and the robot's social behaviors seemed to be a key piece of why children responded so well! One key strategy children used to learn with the robots was social emulation—i.e., copying or mirroring the behaviors used by the robot, such as speech patterns, words, even curiosity and a growth mindset.

My hunch, and my key hypothesis, was this: Social robots can benefit children because they can be social and relational. They can tap into our human capacity to build and respond to relationships. Relational technology, thus, is technology that can build long-term, social-emotional relationships with users.

I took a new look at data I'd collected during my master's thesis to see if there was any evidence for my hypothesis. Spoiler: There was. Children's emulation of the robot's language during the storytelling activity appeared to be related both to children's rapport with the robot and their learning.

Assessing children's relationships

Because I wanted to measure children's relationships with the robot and gain an understanding of how children treated it relative to other characters in their lives, I created a bunch of assessments. Here's a summary of a few of them.

We used some of these in another longitudinal learning study where kids listened to and retold stories with a social robot. I found correlations between measures of engagement, learning, and relationships. For example, children who reported a stronger relationship or rated the robot as a greater social-relational agent showed higher vocabulary posttest scores. These were promising results...

So, armed with my assessments and hypotheses, I ran some more experimental studies.

Evaluating relational AI: Entrainment and Backstory

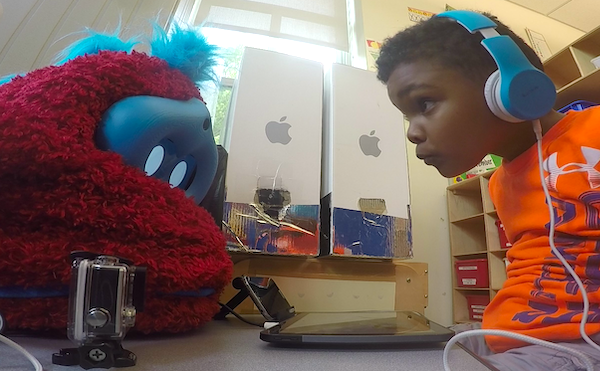

First, I performed a one-session experiment that explored whether enabling a social robot to perform rapport- and relationship-building behaviors, specifically entrainment and self-disclosure (backstory), would increase children's engagement and learning.

In positive human-human relationships, people frequently mirror or mimic each other's behavior. This mimicry (also called entrainment) is associated with rapport and smoother social interaction. I gave the robot a speech entrainment module, which matched vocal features of the robot's speech, such as speaking rate and volume, to the user's.
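As a rough illustration of the idea, here is a minimal sketch of speech entrainment on two vocal features. It is not the module used in the study: the `VocalFeatures` type, the units, the `strength` parameter, and the clamping ranges are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class VocalFeatures:
    speaking_rate: float  # words per second (hypothetical unit)
    volume_db: float      # loudness in decibels (hypothetical unit)


def clamp(value: float, low: float, high: float) -> float:
    """Keep a value inside the closed range [low, high]."""
    return max(low, min(high, value))


def entrain(robot: VocalFeatures, child: VocalFeatures,
            strength: float = 0.5) -> VocalFeatures:
    """Move the robot's vocal features part of the way toward the child's.

    strength=0.0 leaves the robot unchanged; strength=1.0 copies the child.
    The clamping ranges are illustrative assumptions, not study values.
    """
    rate = robot.speaking_rate + strength * (child.speaking_rate - robot.speaking_rate)
    volume = robot.volume_db + strength * (child.volume_db - robot.volume_db)
    return VocalFeatures(
        speaking_rate=clamp(rate, 1.5, 4.0),
        volume_db=clamp(volume, 50.0, 75.0),
    )


# Hypothetical values: the child speaks more slowly and quietly than the robot.
robot_default = VocalFeatures(speaking_rate=3.0, volume_db=65.0)
child_observed = VocalFeatures(speaking_rate=2.0, volume_db=60.0)
adapted = entrain(robot_default, child_observed)
print(adapted)
```

Moving only part of the way toward the child's features, and clamping the result, is one plausible design choice for keeping the robot's speech intelligible while still mirroring the child.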

I also had the robot disclose personal information, about its poor speech and hearing abilities, in the form of a backstory.

86 kids played with the robot in a 2x2 study (entrainment vs. no entrainment and backstory vs. no backstory). The robot engaged the children one-on-one in conversation, told a story embedded with key vocabulary words, and asked children to retell the story.
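For readers unfamiliar with 2x2 designs, the sketch below enumerates the four conditions that result from crossing the two factors. The round-robin assignment is purely an assumption for illustration; how children were actually assigned to conditions is not described here.

```python
from collections import Counter
from itertools import product

# The two factors of the study, each either on (True) or off (False).
factors = {"entrainment": [True, False], "backstory": [True, False]}

# Crossing the factors gives four (entrainment, backstory) conditions.
conditions = list(product(factors["entrainment"], factors["backstory"]))


def assign(n_children: int) -> list:
    """Assign children to conditions in round-robin order (an assumption)."""
    return [conditions[i % len(conditions)] for i in range(n_children)]


assignments = assign(86)
counts = Counter(assignments)
for (entrainment, backstory), count in counts.items():
    print(f"entrainment={entrainment}, backstory={backstory}: {count} children")
```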

I measured children's recall of the key words and their emotions during the interaction, examined their story retellings, and asked children questions about their relationship with the robot.

I found that the robot's entrainment led children to show more positive emotions and fewer negative emotions. Children who heard the robot's backstory were more likely to accept the robot's poor hearing abilities. Entrainment paired with backstory led children to emulate more of the robot's speech in their stories; these children were also more likely to comply with one of the robot's requests.

In short, the robot's speech entrainment and backstory appeared to increase children's engagement and enjoyment in the interaction, improve their perception of the relationship, and contribute to children's success at retelling the story.

Evaluating relational AI: Relationships through time

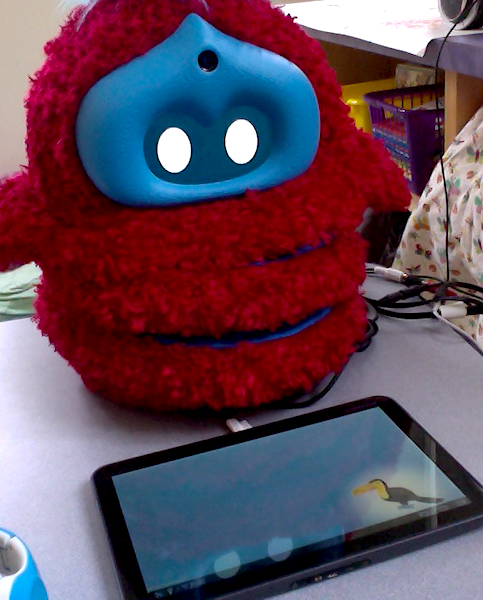

My goals in the final study were twofold. First, I wanted to understand how children think about social robots as relational agents in learning contexts, especially over multiple encounters. Second, I wanted to see how adding relational capabilities to a social robot would impact children's learning, engagement, and relationship with the robot.

Long-term study

Would children who played with a relational robot show greater rapport, a closer relationship, increased learning, greater engagement, more positive affect, and more peer mirroring, and treat the robot as more of a social other, than children who played with a non-relational robot? Would children who reported feeling closer to the robot (regardless of condition) show more learning and peer mirroring?

In this study, 50 kids played with either a relational or a non-relational robot. The relational robot was situated as a socially contingent agent, using entrainment and affect mirroring; it referenced shared experiences, such as past activities performed together, and used the child's name; it took specific actions with regard to relationship management; and it told stories that were personalized in both level (i.e., syntactic difficulty) and content (i.e., similarity of the robot's stories to the child's).

The non-relational robot did not use these features. It simply followed its script. It did personalize stories based on level, since this is beneficial but not specifically related to the relationship.
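To make the difference between the two kinds of story personalization concrete, here is a small sketch. Everything in it is hypothetical: the `Story` type, the integer levels, and the use of word-overlap (Jaccard) similarity as a stand-in for content similarity are assumptions for this example, not the method used in the study.

```python
from dataclasses import dataclass


@dataclass
class Story:
    title: str
    level: int  # hypothetical syntactic-difficulty level
    text: str


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity between two texts, from 0.0 to 1.0."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


def pick_story(stories, child_level, child_story=None):
    """Pick a story at the child's level.

    With no child_story, only the level is used (level-only personalization).
    With a child_story, the most similar story at that level is preferred.
    """
    candidates = [s for s in stories if s.level == child_level] or list(stories)
    if child_story is None:
        return candidates[0]
    return max(candidates, key=lambda s: jaccard(s.text, child_story))


# Made-up example stories, for illustration only.
stories = [
    Story("Frog at the pond", 1, "the frog jumped into the pond"),
    Story("Dog in the park", 1, "the dog ran in the park"),
    Story("Long trip", 2, "because the frog was tired he rested before the long trip"),
]
child_story = "my dog ran fast in the park"

level_only = pick_story(stories, child_level=1)
level_and_content = pick_story(stories, child_level=1, child_story=child_story)
print(level_only.title, "|", level_and_content.title)
```

In this sketch, calling `pick_story` without a child story corresponds to personalizing by level alone, while passing the child's own story adds the content-matching step.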

Each child participated in a pretest session, followed by 8 sessions with the robot and a final posttest session. Each robot session included a pretest, the robot interaction (greeting, conversation, story activity, and closing), and a posttest.

Results: Relationships, learning, and ... gender?

I collected a unique dataset about children's relationships with a social robot over time, which enabled me to look beyond whether children liked the robot or not or whether they learned new words or not. The main findings include:

- Children in the *Relational* condition reported that the robot was a more human-like, social, relational agent and responded to it in more social and relational ways. They often showed more positive affect, disclosed more information over time, and reported becoming more accepting of both the robot and other children with disabilities.

-

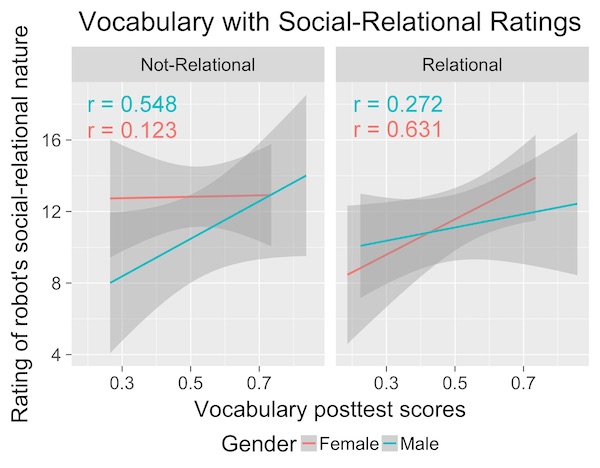

Children in the \textit{Relational} condition showed stronger correlations between their scores on the relationships assessments and their learning and behavior, such as their vocabulary posttest scores, emulation of the robot's language during storytelling, and use of target vocabulary words.

- Regardless of condition, children who rated the robot as a more social and relational agent were more likely to treat it as such, and they also showed more learning.

- Children's behavior showed that they thought of the robot and their relationship with it differently than their relationships with their parents, friends, and pets. They appeared to understand that the robot was an "in between" entity that had some properties of both alive, animate beings and inanimate machines.

The results of the study provide evidence for links between children's imitation of the robot during storytelling, their affect and valence, and their construal of the robot as a social-relational other. A large part of the power of social robots seems to come from their social presence.

In addition, children's behavior depended on both the robot's behavior and their own personalities and inclinations. Girls and boys seemed to imitate, interact, and respond differently to the relational and non-relational robots. Gender may be something to pay attention to in future work!

Ethics, design, and implications

I include several chapters in my dissertation discussing the design implications, ethical implications, and theoretical implications of my work.

Because of the power social and relational interaction has for humans, relational AI has the potential to engage and empower not only children across many domains (such as education, therapy, and pediatric long-term health support) but also other populations: older children, adults, and the elderly. We can and should use relational AI to help all people flourish, to augment and support human relationships, and to enable people to be happier, healthier, more educated, and more able to lead the lives they want to live.

Further reading

Links

- I wrote about this project for the MIT Media Lab blog: Kids' relationships and learning with social robots

- I wrote about it more: Robots, Gender, and the Design of Relational Technology

- This work is discussed on the MIT Media Lab site

Publications

- Kory-Westlund, J. M. (2019). Relational AI: Creating Long-Term Interpersonal Interaction, Rapport, and Relationships with Social Robots. PhD Thesis, Media Arts and Sciences, Massachusetts Institute of Technology, Cambridge, MA. [PDF]

- Kory-Westlund, J. M., & Breazeal, C. (2019). A Long-Term Study of Young Children's Rapport, Social Emulation, and Language Learning With a Peer-Like Robot Playmate in Preschool. Frontiers in Robotics and AI, 6. [PDF] [online]

- Kory-Westlund, J. M., & Breazeal, C. (2019). Exploring the effects of a social robot's speech entrainment and backstory on young children's emotion, rapport, relationships, and learning. Frontiers in Robotics and AI, 6. [PDF] [online]

- Kory-Westlund, J. M., & Breazeal, C. (2019). Assessing Children's Perception and Acceptance of a Social Robot. Proceedings of the 18th ACM Interaction Design and Children Conference (IDC) (pp. 38-50). ACM: New York, NY. [PDF]

- Kory-Westlund, J. M., Park, H., Williams, R., & Breazeal, C. (2018). Measuring Young Children's Long-term Relationships with Social Robots. Proceedings of the 17th ACM Interaction Design and Children Conference (IDC) (pp. 207-218). ACM: New York, NY. [talk] [PDF]

- Kory-Westlund, J. M., Park, H. W., Williams, R., & Breazeal, C. (2017). Measuring children's long-term relationships with social robots. Workshop on Perception and Interaction dynamics in Child-Robot Interaction, held in conjunction with the Robotics: Science and Systems XIII (pp. 625-626). Workshop website [PDF]