Starting a family in grad school

I wasn't married when I got to MIT, but I had a boyfriend named Randy who moved up to Boston with me. Two years in, we discover that it is, in fact, possible to simultaneously plan a wedding and write a master's thesis! Two years after that? I'm sitting uncomfortably in a floppy hospital gown at Mt. Auburn Hospital, using my husband's phone to forward the reviews I'd just received on a recent journal paper submission and hoping labor doesn't kick in full force before I finish canceling all my meetings and telling people that I'll be taking maternity leave a month sooner than expected.

Baby Elian is born later that night, tiny and perfect. I spend the next three weeks writing my PhD proposal in the hospital waiting room while Elian grows big enough to leave the nursery.

Our decision to have a baby during grad school was not made lightly. For a lot of students, grad school falls smack in the middle of prime mate-finding and baby-making years. But my husband and I knew we wanted kids. We knew fertility decreases over time and didn't want to wait too long. In 2016, I was done with classes and on to the purely research part of the PhD program. My schedule was as flexible as it would ever be. Plus, I work with computers and robots: no cell cultures to keep alive, no chemicals I'd be concerned about while pregnant. Randy did engineering contract work (some for a professor at MIT) and was working on a small startup.

Was it the perfect time? As a fellow grad mom told me once, there's never a perfect time. Have babies when you're ready. That's it.

Okay, we agreed, now's the time. It'd be great, right? We'd have this adorable baby, then Randy would stay home most of the time and play with the baby while I finished up school. He'd even have time in the evenings and on weekends to continue his work.

Naiveté, hello.

Since my pregnancy was relatively easy (I got lucky: even my officemate's pickled cabbage and fermented fish didn't turn my stomach), we were optimistic that everything else would go well, too. The preterm birth was a surprise, sure, but maybe that was a fluke in our perfectly planned family adventure. Then it came time for me to go back to the lab full time. I'd read about attachment theory in psychology papers, i.e., the idea that babies form deep emotional bonds to their caregivers, particularly their mothers. Cool theory, interesting implications about social relationships based on the kind of bond babies formed, and all that. It wasn't until the end of my maternity leave, when I handed our wailing three-month-old boy to my husband before walking out the door, that I internalized it: Elian wasn't just sad that I was going away. He needed me. I mean, looking at it from an evolutionary perspective, it made perfect sense. There I was, his primary source of food, shelter, and comfort, walking in the opposite direction. He had no idea where I was going or whether I'd be back. If I were him, I'd wail, too.

Us: 0. Developmental psychology: 1.

Finding a balance

This was going to be more difficult than we'd thought. For various financial and personal reasons, we had already decided not to put the baby in daycare. Other people's stories ("when he started daycare, he cried for a month, but then he got used to it") weren't our cup of tea. But our plans of me spending my days in the lab while the baby was back at home? Not so much. In addition to Elian's distress at my absence, he generally refused pumped breast milk in favor of crying, hungry and sad.

So, we made new plans. These plans involved bringing Elian to the lab a lot (pretty easy at first: he'd happily wiggle on my desk for hours, entertained by his toes). Coincidentally, that's when I began to feel pressure to prove that what we're doing works. That I can do it. That I can be a woman who has a baby, who's getting a PhD at MIT, who's healthy and happy and "having it all." "Having it all." But no matter what I pick, kids or work or whatever, I'm making a choice about what's important. We all have limited time. What "all" do I want? What do I choose to do with my time? And am I happy with that choice?

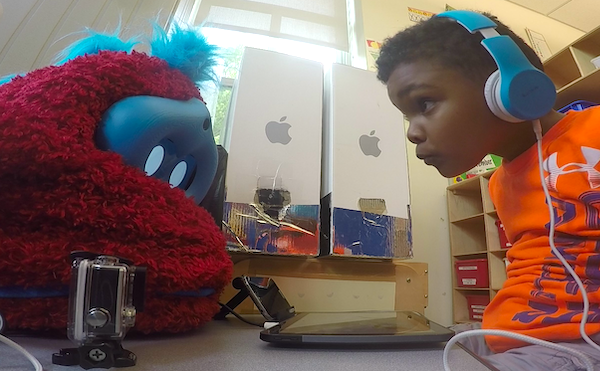

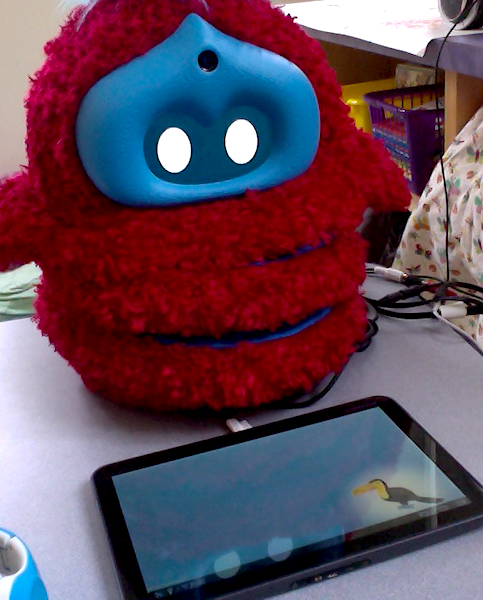

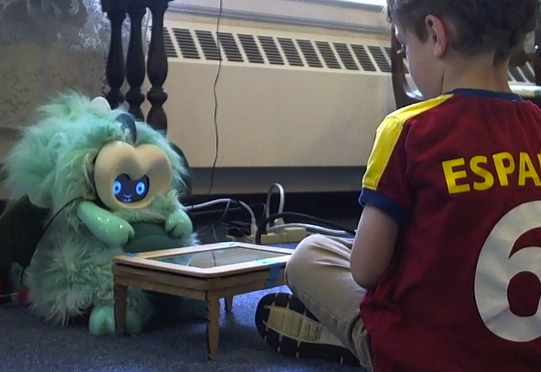

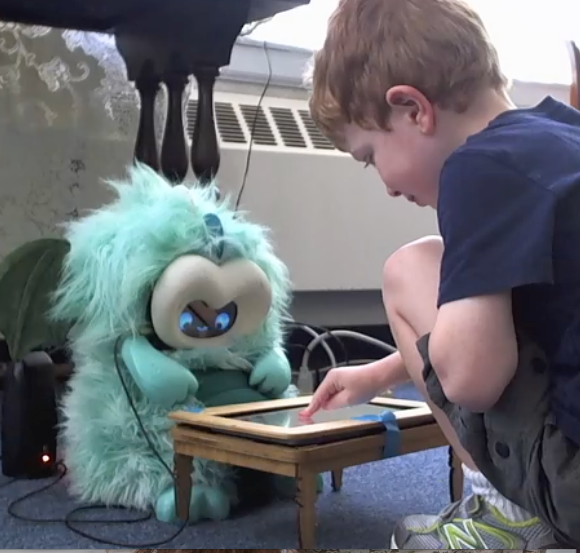

Now, Elian's grown up wearing a Media Arts & Sciences onesie and a Personal Robots Group t-shirt. I'm fortunate that I can do this: I have a super supportive lab group, and I know this definitely wouldn't work for everyone. Not only does our group do a lot of research with young kids, but my advisor has three kids of her own. My officemate has a six-year-old who I've watched grow up. Several other students have gotten married or had kids during their time here. As a bonus, the Media Lab has a pod for nursing mothers on the fifth floor, and a couple of bathrooms even have changing tables. (That said, it's so much faster to just set the baby on the floor, whip off the old diaper, and put on the new one. If he tries to crawl away mid-change, as is his wont these days, he can only get as far as under my desk.)

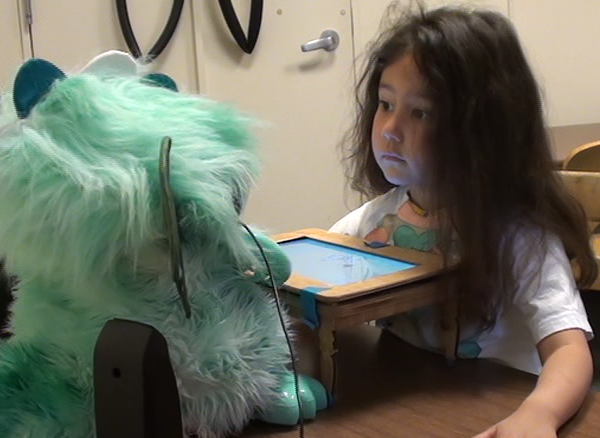

Randy comes to campus more now, too. From the Media Lab's glass-walled conference rooms, you can often see him pacing the hallway with a sleeping baby in a carry pack while he answers emails on his tablet. I feed the baby between meetings and play with him for a while when Randy needs to run over to the Green Building for a contractor meeting, and it works out okay. We keep Elian from licking the robots, and he makes friends from around the world, all of whom are way taller than he is. The best part? He's almost through the developmental stage in which he bursts into tears when he sees them!

I also have the luxury of working from home a lot. That's helped by two things. First, right now I'm either writing code or writing papers: laptop? Check. Good to go. Second, my lab has undergone construction multiple times over the past year, so with all the hammering and paint fumes, no one else wants to work there either.

Stronger, faster, better?

But it's not all sunshine, wobbly first steps, and happy baby coos. I think it's harder to be a parent in grad school as a woman. I know several guys who have kids; they can still manage a whole day (or three) of working non-stop, sleeping on a lab couch, staying up for all-night hacking sessions, or attending conferences in Europe for a week while the baby stays home. Me? Sometimes, if I'm out of sight for five minutes, Elian loses it. Sometimes, we make it three hours. Some nights, when I'm waking up to breastfeed a sad, grumpy, teething baby, it's like I'm pulling all-nighters too, but without the getting-work-done part.

When I'm feeling overwhelmed, I remember a fictional girl named Keladry. The protagonist of Tamora Pierce's Protector of the Small quartet, she was the first girl in the kingdom to openly try to become a knight, traditionally a man's profession (see the parallel to academia?). She followed in the footsteps of another girl, Alanna, who opened the ranks by pretending to be a boy throughout her training and revealed her identity only when she was knighted. I remember Keladry because of the discipline and perseverance she embodied.

I remember her feeling that she had to be stronger, faster, and better than all the boys, because she wasn't just representing herself; she was representing all girls. Sometimes, I feel the same: that as a grad mom, I'm representing all grad moms. I have to be a role model. I have to stick it out, show that not only do I measure up, but that I can excel, despite being a mother. Because of being a mother. I have to show that it's a point in our favor, not a mark against us.

I remember Keladry's discipline: getting up early to train extra hard, working longer to make sure she exceeded the standard. I remember her standing tall in the face of bullies, trying to stay strong when others told her she wasn't good enough and wouldn't make it.

So I get up earlier, writing paper drafts in the dawn light with a sleeping baby nestled beside me. I debug code when he naps (even at 14 months, he still naps twice a day, lucky me). I train UROPs (undergraduate researchers), run experimental studies, analyze data, and publish papers. I push on. I don't have to face down bullies like Keladry, and I'm fortunate to have a lot of support at MIT. But sometimes, it's still a struggle.

When I was talking through my ideas for this blog with other writers, one person said, "I'm not sure how you do it." I didn't have a good answer then, but here's what I should have said: I do it with the help of a super supportive husband, a strong commitment to the life choices I've made, and a large supply of Earl Grey tea.

—

This article originally appeared on the MIT Graduate Student Blog, February 2018