Summer at NASA

In 2011, the summer after I graduated from college, I headed to Greenbelt, Maryland, to work with an international team of engineers and computer scientists at NASA Goddard Space Flight Center. The catch: we were all students! Over forty interns from at least four countries participated in Mike Comberiate's Engineering Boot Camp.

Overview

The boot camp included several projects. The best known was GROVER, the Greenland Rover, a large autonomous vehicle that is now driving across the Greenland ice sheet, mapping and exploring.

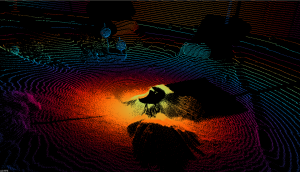

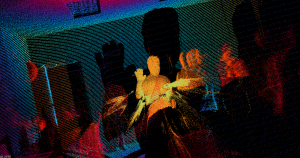

The main project I worked on was called LARGE: LIDAR-Assisted Robotic Group Exploration. A small fleet of robots (a motherbot and several workerbots) used 3D LIDAR data to explore novel areas. My software team developed object recognition, mapping, path planning, and other software to autonomously control the workerbots between infrequent contacts with human monitors. We wrote the control programs using ROS, the Robot Operating System.

Later in the summer, we presented demonstrations of our work at both NASA Wallops Flight Facility and NASA Goddard Space Flight Center.

The LARGE team

- Mentors: NASA Mike, Jaime Cervantes, Cornelia Fermuller, Marco Figueiredo, Pat Stakem
- Software team: Felipe Farias, Bruno Fernades, Thomaz Gaio, Jacqueline Kory, Christopher Lin, Austin Myers, Richard Pang, Robert Taylor, Gabriel Trisca
- Hardware team: Andrew Gravunder, David Rochell, Gustavo Salazar, Matias Soto, Gabriel Sffair
- Others involved: Mike Huang, William Martin, Randy Westlund

Project description

The goal of the LARGE project is to assemble a networked team of autonomous robots to be used for three-dimensional terrain mapping, high-resolution imaging, and sample collection in unexplored territories. The software we develop in this proof-of-concept project will be transportable from our test vehicles to actual flight vehicles, which could be sent anywhere from toxic waste dumps or disaster zones on Earth to asteroids, moons, and planetary surfaces beyond.

The robot fleet consists of a single motherbot and a set of workerbots. The motherbot can recognize the location and orientation of each workerbot, allowing her to designate target destinations for any worker and track their progress. Presently, localization and recognition are performed by detecting spheres mounted in a unique configuration atop each robot. Each worker can independently plot a safe path through the terrain to the goal assigned by the motherbot. Communication between robots is interdependent and redundant, with messages sent over a local network. If communication between the workers and the motherbot is lost, the workers will be able to establish a new motherbot and continue the mission. The failure of any single robot or device will not prevent the mission from being completed.
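
To make the failover idea concrete, here is a minimal sketch in plain Python (not ROS, and not the code our team actually wrote). It assumes each worker hears periodic heartbeats from the motherbot and treats a hypothetical 5-second silence as "communication lost". The election rule (the lowest robot ID among reachable robots becomes the new motherbot) is likewise an illustrative assumption; `Worker`, `on_heartbeat`, and `check_motherbot` are hypothetical names.

```python
"""Hypothetical sketch of motherbot failover; not the LARGE team's code."""
from __future__ import annotations

import time
from dataclasses import dataclass, field


@dataclass
class Worker:
    robot_id: int
    timeout_s: float = 5.0  # assumed heartbeat timeout, in seconds
    motherbot_id: int | None = None
    last_heartbeat: float = field(default_factory=time.monotonic)

    def on_heartbeat(self, sender_id: int, now: float) -> None:
        """Record a heartbeat, but only if it comes from the current motherbot."""
        if sender_id == self.motherbot_id:
            self.last_heartbeat = now

    def check_motherbot(self, reachable_ids: set[int], now: float) -> int:
        """Elect a new motherbot if the current one has gone silent.

        Assumed rule: the lowest ID among reachable robots (including
        this one) becomes the new motherbot.
        """
        silent = now - self.last_heartbeat > self.timeout_s
        if self.motherbot_id is None or silent:
            self.motherbot_id = min(reachable_ids | {self.robot_id})
            self.last_heartbeat = now  # give the new motherbot a fresh window
        return self.motherbot_id


if __name__ == "__main__":
    w = Worker(robot_id=3, motherbot_id=0, last_heartbeat=0.0)
    w.on_heartbeat(0, now=1.0)
    print(w.check_motherbot({1, 2, 3}, now=2.0))   # 0: motherbot still within timeout
    print(w.check_motherbot({1, 2, 3}, now=10.0))  # 1: motherbot 0 silent, lowest reachable ID wins
```

A deterministic rule like this means every worker that sees the same set of reachable robots picks the same new motherbot without extra negotiation. A real fleet would still need to handle workers that disagree about who is reachable.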

The robots use LIDAR sensors to take images of the terrain, stitching successive images together to create global maps. These maps can then be used for navigation. Eventually, several of the workers will carry other imaging sensors, such as cameras for stereo vision or a Microsoft Kinect, to complement the LIDAR and enable the corroboration of data across sensory modalities.
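
Stitching scans into a global map boils down to expressing every scan in one shared world frame. The sketch below is a deliberately simplified illustration, not our pipeline: it flattens the problem to 2D and assumes each scan arrives with a known robot pose (x, y, heading). Real 3D LIDAR stitching usually also has to estimate that pose, for example by scan matching. The names `to_world`, `stitch`, and the 0.5 m default cell size are hypothetical.

```python
"""Hypothetical sketch: merge successive 2D scans into one global occupancy set.

Assumes each scan comes with a known robot pose (x, y, heading) in the
world frame; estimating that pose (e.g. by scan matching) is omitted.
"""
from __future__ import annotations

import math

Point = tuple[float, float]
Pose = tuple[float, float, float]  # x (m), y (m), heading (rad)


def to_world(points: list[Point], pose: Pose) -> list[Point]:
    """Rotate robot-frame points by the heading, then translate by the position."""
    x0, y0, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(x0 + c * px - s * py, y0 + s * px + c * py) for px, py in points]


def stitch(scans: list[tuple[Pose, list[Point]]], cell_m: float = 0.5) -> set[tuple[int, int]]:
    """Accumulate every scan into a single set of occupied grid cells."""
    occupied: set[tuple[int, int]] = set()
    for pose, points in scans:
        for wx, wy in to_world(points, pose):
            occupied.add((math.floor(wx / cell_m), math.floor(wy / cell_m)))
    return occupied


if __name__ == "__main__":
    scans = [
        ((0.0, 0.0, 0.0), [(2.5, 0.5)]),          # robot at the origin, facing +x
        ((2.0, 0.0, 0.0), [(0.5, 0.5)]),          # same obstacle seen from 2 m further on
        ((1.0, 0.0, math.pi / 2), [(2.5, 0.5)]),  # robot turned to face +y
    ]
    print(sorted(stitch(scans, cell_m=1.0)))  # [(0, 2), (2, 0)]
```

The first two scans see the same obstacle from different places and land in the same world cell. Overlapping views merge this way instead of producing duplicates, which is what makes the combined map usable for navigation.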

Articles and other media

In the media:

- Geeked on Goddard reported on the lab all summer! A series of five articles appears here. With videos!
- GROVER, the Greenland Rover that was being developed by some of the students in the boot camp, headed out to the ice sheet in May 2013!

On my blog:

- Don't ever stop - the start of the summer, an overview of the projects, and an inspiring quote
- Computer innards and radios - in which I learn many new things
- Science vs. engineering - observations on myself vs. the engineers I worked with
- Trip to Wallops - the lab took a trip to the beach to test out GROVER
- Engineering Boot Camp videos - it seems some of these videos no longer work, but see the others below!

Videos

I spent the summer writing code, learning ROS, and dealing with our LIDAR images. Other people took videos! (Captions, links to the videos, and credits appear below each video.) More may be available on Geeked on Goddard or on nasagogblog's YouTube channel.

- Meet the robots: A quick slideshow of the robots in the lab. Video from nasagogblog.
- Getting ready for the beach: Preparations for our trip to NASA Wallops, where GROVER was tested on the beach. Video from nasagogblog.
- GROVER on the beach: The Greenland Rover during a test run on the beach at Wallops. Video from nasagogblog.